Photo by Clint Adair on Unsplash

Have you ever written code in more than one place that needs to stay in sync? Perhaps there is a tool in your framework of choice that can generate multiple files from a single source of truth, like T4 templates in the .NET world; perhaps not. Even if there is such a tool, it adds a layer of complexity that is not necessarily easy to grok. If you look at the output files or the template itself, it may not be clear which files are affected or related.

At Khan Academy, we have a linter, written in Python, that is executed whenever we create a new diff for review. It runs across a subset of our files and looks for blocks of text that are marked up with a custom comment format that identifies those blocks as being synchronized with other target blocks. Included in that markup is a checksum of the target block content, so that if the target changes, the linter reports an error. This is our signal to check whether further changes are needed and then update the checksums that have been invalidated. The only bugbear folks seem to have is that, instead of offering an option to auto-fix checksums in need of update, it outputs a Perl script that has to be copied and run for that purpose.
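The heart of that check is small. As a rough illustration only (written in JavaScript rather than the linter's Python, with hypothetical helper names `adler32` and `isChecksumValid`), the checksum stored in a tag can be compared against a freshly computed Adler-32 checksum of the target block's content; Adler-32 is the algorithm the requirements below say the existing markup uses. This sketch deliberately ignores which lines count as block content and assumes checksums are written as decimal numbers, as in the examples later in this post.

```javascript
// Largest prime below 2^16, as defined for Adler-32 (RFC 1950).
const MOD_ADLER = 65521;

// Minimal Adler-32 over the UTF-8 bytes of a string.
function adler32(text) {
  let a = 1;
  let b = 0;
  for (const byte of Buffer.from(text, "utf8")) {
    a = (a + byte) % MOD_ADLER;
    b = (b + a) % MOD_ADLER;
  }
  // Combine the two 16-bit sums into an unsigned 32-bit value.
  return b * 65536 + a;
}

// Hypothetical helper: does the checksum recorded in a tag (a decimal
// string, by assumption) still match the target block's current content?
function isChecksumValid(recordedChecksum, targetBlockContent) {
  return Number(recordedChecksum) === adler32(targetBlockContent);
}
```

For example, `adler32("Wikipedia")` is 300286872 (0x11E60398), so `isChecksumValid("300286872", "Wikipedia")` is true, while any edit to the content would change the result and flag the tag as stale.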

Small bugbear aside, this tool is fantastic. It enables us to link code blocks that need to be synchronized and catches when we change them with reasonably low overhead. Though I believe it is hugely useful, it is sadly custom to our codebase. I have long wanted to address that and create an open source version for everyone to use. checksync is that open source version.

🤔 The Requirements

Before writing checksync, I started out with the following requirements:

- It should work with existing marked up code in the Khan Academy codebase; specifically,

  - File paths are relative to the project root directory

  - Checksums are calculated using Adler-32

  - Both // and # style comments are used to comment the markup tags

  - Start tag format is: `sync-start:<ID> <CHECKSUM> <TARGET_FILE_PATH>`

  - End tag format is: `sync-end:<ID>`

  - Multiple start tags can exist for the same tag ID but with different target files

  - Sync tags are not included in the checksum'd content

  - An extra line of blank content is included in the checksum'd content (due to a holdover from an earlier implementation)

- Files matched by .gitignore patterns should be ignored

- Additional files can be ignored

- It should be comparably performant to the existing linter

  - The linter ran over the entire Khan Academy website codebase in less than 15 seconds

- It should auto-update invalid checksums if asked to do so

- It should output file paths such that editors like Visual Studio Code can open them on the correct line

- It should support more comment styles

- It should generally support any text file

- It should run on Node 8 and above

  - Some of our projects are still using Node 8 and I wanted to support those uses
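A minimal sketch of reading tags in that format might look like the following. It is not checksync's implementation: `parseSyncBlocks` is a hypothetical name, only the // and # comment tokens are handled by default, the checksum is treated as optional (as the `<?checksum>` in the help text further down suggests), and the extra blank line that the Khan Academy checksums include is left to whatever computes the checksum. It sticks to syntax that Node 8 supports.

```javascript
// Escape a comment token so it can be embedded in a regular expression.
function escapeRegExp(token) {
  return token.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical parser: returns a Map of marker ID -> { targets, content }.
function parseSyncBlocks(text, commentTokens = ["//", "#"]) {
  const comment = commentTokens.map(escapeRegExp).join("|");
  // <comment> sync-start:<ID> <optional CHECKSUM> <TARGET_FILE_PATH>
  const startTag = new RegExp(
    `^\\s*(?:${comment})\\s*sync-start:(\\S+)\\s+(?:(\\d+)\\s+)?(\\S+)\\s*$`
  );
  // <comment> sync-end:<ID>
  const endTag = new RegExp(`^\\s*(?:${comment})\\s*sync-end:(\\S+)\\s*$`);

  const blocks = new Map();
  let open = null; // The block whose content is currently being collected.

  text.split("\n").forEach((line, index) => {
    const lineNumber = index + 1; // 1-based, for file:line reporting.
    const start = startTag.exec(line);
    const end = endTag.exec(line);
    if (start) {
      const [, id, checksum = null, targetFile] = start;
      if (open === null) {
        open = { id, targets: [], content: [] };
      } else if (open.id !== id) {
        throw new Error(`Line ${lineNumber}: sync-start:${id} inside ${open.id}`);
      }
      // One ID may have several start tags, each naming a different target.
      open.targets.push({ checksum, targetFile, line: lineNumber });
    } else if (end) {
      if (open === null || open.id !== end[1]) {
        throw new Error(`Line ${lineNumber}: unexpected sync-end:${end[1]}`);
      }
      blocks.set(open.id, open);
      open = null;
    } else if (open !== null) {
      // Tag lines themselves are excluded; only lines between them are content.
      open.content.push(line);
    }
  });

  if (open !== null) {
    throw new Error(`sync-start:${open.id} has no matching sync-end`);
  }
  return blocks;
}
```

Recording a 1-based line number for each start tag is what makes it possible to report problems as file:line, a form that editors such as Visual Studio Code can open at the right line.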

With these requirements in mind, I implemented checksync (and ancesdir, which I ended up needing to ensure project root-relative file paths). By making it compatible with the existing Khan Academy linter, I could leverage the existing Khan Academy codebase to help measure performance and verify that things worked correctly. After a few changes to address various bugs and performance issues, it is still mildly slower than the Python equivalent, but the added features it provides more than make up for that (especially the fact that it is available to folks outside of our organization).
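The idea behind ancesdir can be sketched with Node's built-in fs and path modules. The helpers below, `findRootDir` and `toRootRelative`, are hypothetical and are not ancesdir's actual API; they walk up from a directory until they find one containing a marker file (package.json here, mirroring checksync's default root marker) and then express a file's path relative to that root, using "/" separators as the target paths in sync-start tags do.

```javascript
const fs = require("fs");
const path = require("path");

// Walk up from `fromDir` until a directory containing `marker` is found.
function findRootDir(fromDir, marker = "package.json") {
  let current = path.resolve(fromDir);
  for (;;) {
    if (fs.existsSync(path.join(current, marker))) {
      return current;
    }
    const parent = path.dirname(current);
    if (parent === current) {
      // Reached the filesystem root without finding the marker.
      throw new Error(`No ancestor of ${fromDir} contains ${marker}`);
    }
    current = parent;
  }
}

// Express a file path relative to the project root with "/" separators.
function toRootRelative(filePath, marker = "package.json") {
  const absolute = path.resolve(filePath);
  const root = findRootDir(path.dirname(absolute), marker);
  return path.relative(root, absolute).split(path.sep).join("/");
}
```

Because both sides of a sync pair resolve paths against the same root, a tag written in one file can name its target the same way regardless of which directory the tool is run from.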

🎉 Check It Out

checksync includes a --help option to get information on usage. I have included the output below to give an overview of usage and the options available to customize how checksync runs.

checksync --help

checksync ✅ 🔗

Checksync uses tags in your files to identify blocks that need to remain synchronised. It works on any text file as long as it can find the tags.

Tag Format

Each tagged block is identified by one or more sync-start tags and a single sync-end tag.

The sync-start tags take the form:

<comment> sync-start:<marker_id> <?checksum> <target_file>

The sync-end tags take the form:

<comment> sync-end:<marker_id>

Each marker_id can have multiple sync-start tags, each with a different target file, but there must be only one corresponding sync-end tag.

Where:

<comment> is one of the comment tokens provided by the --comments argument

<marker_id> is the unique identifier for this marker

<checksum> is the expected checksum of the corresponding block in the target file

<target_file> is the path from your package root to the target file with a corresponding sync block with the same marker_id

Usage

checksync <arguments> <include_globs>

Where:

<arguments> are the arguments you provide (see below)

<include_globs> are glob patterns for identifying files to check

Arguments

--comments,-c A string containing comma-separated tokens that indicate the start of lines where tags appear. Defaults to "//,#".

--dry-run,-n Ignored unless supplied with --update-tags.

--help,-h Outputs this help text.

--ignore,-i A string containing comma-separated globs that identify files that should not be checked.

--ignore-files A comma-separated list of .gitignore-like files that provide path patterns to be ignored. These will be combined with the --ignore globs. Ignored if --no-ignore-file is present. Defaults to .gitignore.

--no-ignore-file When true, does not use any ignore file. This is useful when the default value for --ignore-files is not wanted.

--root-marker,-m By default, the root directory (used to interpret and generate target paths for sync-start tags) for your project is determined by the nearest ancestor directory to the processed files that contains a package.json file. If you want to use a different file or directory to identify your root directory, specify that using this argument. For example, --root-marker .gitignore would mean the first ancestor directory containing a .gitignore file.

--update-tags,-u Updates tags with incorrect target checksums. This modifies files in place; run with --dry-run to see what files will change without modifying them.

--verbose More details will be added to the output when this option is provided. This is useful when determining if provided glob patterns are applying as expected, for example.
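To make --update-tags and --dry-run more concrete, here is a hypothetical sketch (not checksync's code) of what an update pass over a single file could do. It assumes the correct checksums have already been computed from the target blocks and are passed in as a Map keyed by "<ID> <TARGET_FILE_PATH>", it only recognizes the default // and # comment tokens, and the file:line location format is an assumption chosen because editors like Visual Studio Code can open it.

```javascript
// <comment> sync-start:<ID> <optional CHECKSUM> <TARGET_FILE_PATH>
const SYNC_START = /^(\s*(?:\/\/|#)\s*sync-start:(\S+)\s+)(?:(\d+)\s+)?(\S+)(\s*)$/;

// Hypothetical update pass: rewrite sync-start tags whose checksum differs
// from the expected value. `expected` maps "<ID> <TARGET_FILE_PATH>" to the
// checksum computed from the target block.
function updateTags(fileName, text, expected, { dryRun = false } = {}) {
  const changes = [];
  const updatedLines = text.split("\n").map((line, index) => {
    const match = SYNC_START.exec(line);
    if (match === null) {
      return line;
    }
    const [, prefix, id, current, targetFile, trailing] = match;
    const wanted = expected.get(`${id} ${targetFile}`);
    if (wanted === undefined || String(wanted) === current) {
      return line; // Unknown target or already correct: leave it alone.
    }
    // Report as file:line so an editor can jump straight to the tag.
    changes.push({
      location: `${fileName}:${index + 1}`,
      from: current === undefined ? null : current,
      to: String(wanted),
    });
    return `${prefix}${wanted} ${targetFile}${trailing}`;
  });
  // A dry run reports what would change but returns the text untouched.
  return { changes, text: dryRun ? text : updatedLines.join("\n") };
}
```

Keeping the dry run as an option on the same function means both modes share one code path, so what a dry run reports is exactly what a real update would change.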

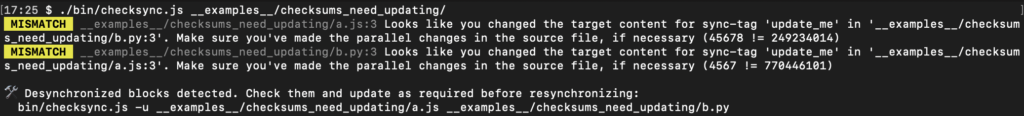

Here is a simple example (taken from the checksync code repository) of running checksync against a directory with two files, using the defaults. The two files are given below to show how they are marked up for use with checksync. In this example, the checksums do not match the tagged content (though you are not expected to know that just by looking at the files; that is what checksync is for).

The JavaScript file, a.js:

    // This is a javascript (or similar language) file
    // sync-start:update_me 45678 __examples__/checksums_need_updating/b.py
    const someCode = "does a thing";
    console.log(someCode);
    // sync-end:update_me

The Python file, b.py:

    # Test file in Python style
    # sync-start:update_me 4567 __examples__/checksums_need_updating/a.js
    code = 1
    # sync-end:update_me

Additional examples that demonstrate various synchronization conditions and error cases can be found in the checksync code repository. To give checksync a try for yourself:

- Install it from the npmjs.com repository: yarn add checksync

- Get the source from github.com/somewhatabstract/checksync and follow the usage instructions.

I hope you find this tool useful. If you do, or if you have any questions, please leave a comment on this blog.