Photo by Sergey Zolkin on Unsplash

This is part 3 of my series on server-side rendering (SSR):

- 🤷🏻‍♂️ What is server-side rendering (SSR)?

- ✨ Creating A React App

- [You are here] 🎨 Architecting a privacy-aware render server

- 🏗 Creating An Express Server

- 🖥 Our first server-side render

- 🖍 Combining React Client and Render Server for SSR

- ⚡️ Static Router, Static Assets, Serving A Server-side Rendered Site

- 💧 Hydration and Server-side Rendering

- 🦟 Debugging and fixing hydration issues

- 🛑 React Hydration Error Indicator

- 🧑🏾‍🎨 Render Gateway: A Multi-use Render Server

This week, we are back to our adventures in server-side rendering (SSR). So far in this series, we have looked at what SSR is and how to create a React app.

At this point in our journey into SSR, we need a server. Before we make a server, we must consider privacy and performance, so this post is going to be light on code as we talk through important considerations. Although these considerations could be dealt with later, thinking about them up front will help us avoid some really big pitfalls.

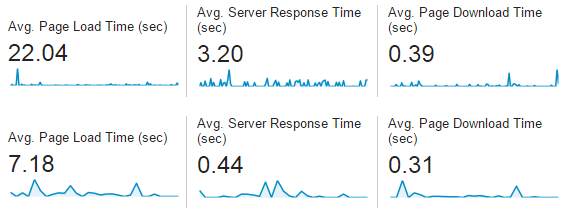

📈 Performance

All our initial considerations regarding server architecture come down to performance. Performance is the reason we want to implement SSR: we want to reduce the time our users must wait to see and interact with our site. Since rendering a page, whether in a browser or in our render server, can take a variable amount of time depending on network latency, data requirements, and more, this inevitably means caching. Caching also helps us reduce costs since the render server will do less work. Whether caching is provided by your own implementation or via a service like Fastly, it has implications for what we should render on the server.

- To get the most from our SSR solution, we need to render as much of a page as we possibly can.

- To get the most from our caching, we want to share renders among as large a user base as we can so that a single render provides a cache hit for many people.

These two points compete with one another. In an ideal SSR world, the entire page is rendered for any given route so that the client does as little work as possible. In an ideal caching world, a single cached entry for a route is shared with every user, so it is rendered once and then served to everyone. Suppose our app changes the page based on information about the user, both general (what device they are on, whether they are logged in, and so on) and specific (what their name is, where they live, and so on). Every piece of differentiating information we render during SSR narrows the set of users who can share that render, so we only get the most from caching if we leave such information out. I doubt there is a hard and fast rule for finding the sweet spot between being too specific about cache keys and being too general about rendering, but we can make things easier for ourselves.

First, we must ensure the cache key only includes generalizations and non-user-specific info.

Second, we introduce a means of making generalizations about the user (is logged in, is using Android, etc.) that can form part of the cache key and that we can then pass to the render server to influence what is rendered.
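To make these two steps concrete, here is a minimal sketch in TypeScript. The names (`RenderRequest`, `UserTraits`, `generalizeUser`, `cacheKeyFor`), the choice of traits, and the key format are all assumptions made for illustration; they are not part of any library, nor necessarily what we will build later in this series.

```typescript
// Hypothetical shape of the request details visible to the cache layer.
interface RenderRequest {
  path: string;
  userAgent: string;
  sessionCookie?: string; // user-specific: must never reach the cache key
}

// Generalized traits: coarse buckets that many users share.
interface UserTraits {
  loggedIn: boolean;
  platform: "android" | "ios" | "other";
}

// Reduce a specific request to generalizations about the user.
function generalizeUser(req: RenderRequest): UserTraits {
  const ua = req.userAgent.toLowerCase();
  const platform: UserTraits["platform"] = /android/.test(ua)
    ? "android"
    : /iphone|ipad/.test(ua)
      ? "ios"
      : "other";
  // Only the presence of a session is used, never its value.
  return { loggedIn: Boolean(req.sessionCookie), platform };
}

// Build the cache key from the route and the generalized traits only.
function cacheKeyFor(req: RenderRequest): string {
  const traits = generalizeUser(req);
  return [req.path, traits.loggedIn ? "in" : "out", traits.platform].join("|");
}

// Two different logged-in users on Android devices share one cache entry.
const alice: RenderRequest = {
  path: "/home",
  userAgent: "Mozilla/5.0 (Linux; Android 14; Pixel 8)",
  sessionCookie: "alice-session",
};
const bob: RenderRequest = {
  path: "/home",
  userAgent: "Mozilla/5.0 (Linux; Android 13; SM-S911B)",
  sessionCookie: "bob-session",
};

console.log(cacheKeyFor(alice)); // "/home|in|android"
console.log(cacheKeyFor(alice) === cacheKeyFor(bob)); // true
```

The same `UserTraits` object that feeds the key is what we would hand to the render server, so the render can only vary in ways the cache already knows about.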

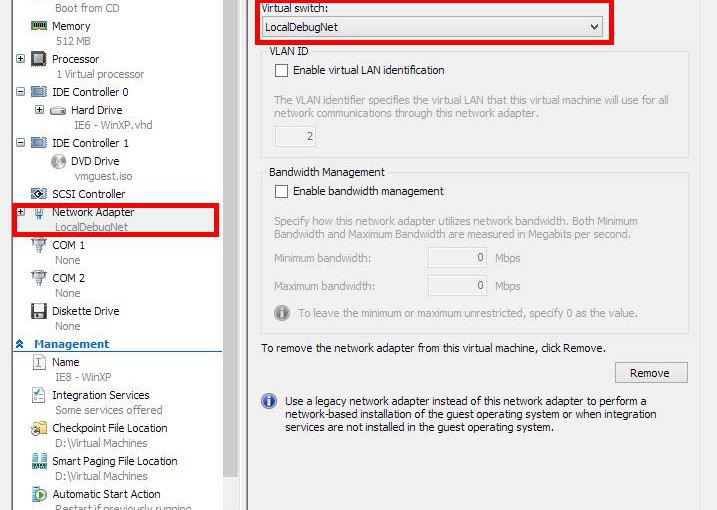

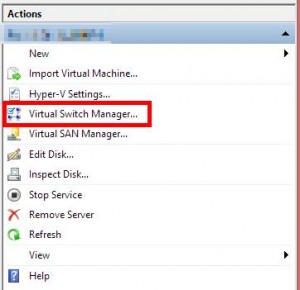

Combining these two pieces, generalizing users and using those generalizations in the cache key, enables us to maximize what we can render while providing cacheable results that can be shared without leaking personal information. However, since the generalized information must be calculated and it influences the cache key, there are implications for our architecture: if the render server does the generalization work, how can it tell the cache about it? We will look into that later, but for now, let's just note it as a problem to be solved.

📊 Data Access

At this point, we have worked through how to architect our server to support caching via generalizing users so that cache hits are likely without leaking personal information. That's great, though one might think it is unnecessary. As long as user-specific information is not available to the render server, we cannot possibly render user-specific data. In many apps, data is obtained via REST or GraphQL and, as such, is asynchronous. Since our render server only performs the very first render of a page, any asynchronous data will not be resolved and therefore will not be included in the result. So, where's the problem?

Well, not all data is asynchronous; some may be in the request itself, and even if we don't cache based on it, we must still ensure it is not rendered in the result. To mitigate this, we can use frontend components that wrap access to user-specific information so that we have a consistent rendering experience. Whether rendering for the first time in the client or for the first time on the render server, these components ensure that the same safe output is rendered and that only the client later renders the real data. Such data access components might render a spinner or some other placeholder that stands in for the personal information that is not yet available (such as the username) until the data arrives. This means that all the considerations for rendering the right thing, whether in a browser or in the render server, are codified within the React app itself.
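As a rough illustration, the sketch below models the decision such a data access component makes. It is written as a plain TypeScript function that returns markup so that it runs without dependencies; in a real app this logic would live in a React component that starts in the pending state and switches to ready once the client has the data. The names `UserDataState` and `renderUserName`, and the placeholder markup, are hypothetical.

```typescript
// Hypothetical state of one piece of user-specific data.
type UserDataState =
  | { status: "pending" } // first render, on the server or in the browser
  | { status: "ready"; userName: string }; // after the client has the data

// Escape text before placing it inside markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// The single sanctioned way to render the user's name.
function renderUserName(state: UserDataState): string {
  if (state.status === "pending") {
    // No personal data is read here, so this output is safe to cache.
    return '<span class="placeholder" aria-busy="true">Loading</span>';
  }
  return `<span class="user-name">${escapeHtml(state.userName)}</span>`;
}

// The server render and the first client render both start as "pending",
// so they produce identical markup.
const firstRender = renderUserName({ status: "pending" });

// Only a later, client-side render shows the real value.
const laterRender = renderUserName({ status: "ready", userName: "Ada" });

console.log(firstRender);
console.log(laterRender);
```

Because the placeholder branch never touches the user's data, nothing personal can end up in the server-rendered result, and the first client render matches it.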

By using components in conjunction with linters and tests, we can enforce a data access policy that enables us to SSR effectively while respecting and enforcing user privacy.
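One way a test could back this policy is to render a page for a made-up user and check that none of that user's details appear in the output. The sketch below assumes a hypothetical `findLeaks` helper and takes the rendered HTML as a plain string; how that HTML is produced is deliberately left out.

```typescript
// Hypothetical record of the user-specific values a test user carries.
interface TestUser {
  name: string;
  email: string;
  city: string;
}

// Return the names of the fields whose values appear in the rendered HTML.
function findLeaks(html: string, user: TestUser): string[] {
  const haystack = html.toLowerCase();
  return (Object.keys(user) as Array<keyof TestUser>).filter(
    (field) => haystack.indexOf(user[field].toLowerCase()) !== -1
  );
}

// Distinctive values make coincidental matches unlikely.
const testUser: TestUser = {
  name: "Zephyrine Quux",
  email: "zq@example.test",
  city: "Xanadu-on-Sea",
};

const safeHtml = '<header><span class="placeholder">Loading</span></header>';
const leakyHtml = "<header>Welcome back, Zephyrine Quux!</header>";

console.log(findLeaks(safeHtml, testUser)); // no fields reported
console.log(findLeaks(leakyHtml, testUser)); // reports the "name" field
```

A plain substring check like this is only a first line of defense; for example, it would miss a value that has been HTML-escaped or reformatted before rendering.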

📃 In Summary

That's a lot of words. Hopefully it has clarified some of the more jagged edges of SSR that often are not apparent until much later. It is much easier to consider these things up front[1].

In discussing how our render server will work with respect to a cache and user privacy, we have uncovered some details that affect not only the render server, but also our React app:

- The render server should sit behind a cache for performance, cost, and other benefits

- User-specific data like names, location, etc. should be omitted from the SSR result, cache keys, and initial client-side render

- It is useful to allow generalized user information in SSR, but it must also form part of the cache key

- React components, linters, and tests can enforce your policy around rendering user-specific content

That is it for this week. I thought I would get around to implementing the first version of our server, but I did not, so we will get into that next time. In the meantime, here are some things to consider that we will likely address in upcoming posts.

- Given that SSR only performs the very first render of our React app, how can we include asynchronous data and render more of our page?

- How will our render server know what browser code to render?

- How can generalizations about users be included in the cache key and used by the render server?

[1] Something I have painfully learned by not doing so.