Hopefully, those who are regular visitors to this blog1 have noticed a little speed boost of late. That is because I recently spent several days overhauling the appearance and performance with the intent of making the blog less frustrating and a little more professional. However, the outcome of my effort turned out to have other pleasant side effects.

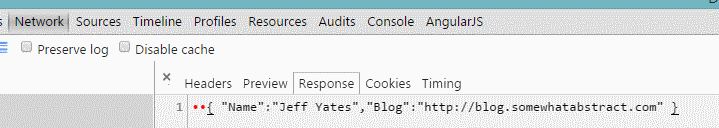

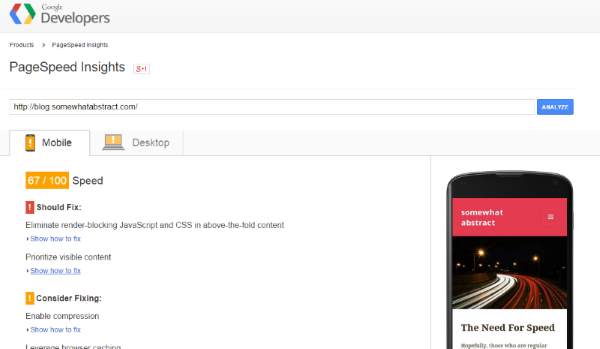

I approached the performance issues as I would when developing software; I used data. In fact, it was data that drove me to look at it in the first place. Like many websites, this site uses Google Analytics, which allows me to poke around the usage of my site, see which of the many topics I have covered are of interest to people, what search terms bring people here (assuming people allow their search terms to be shared), and how the site is performing on various platforms and browsers. One day I happened to notice that my page load speeds, especially on mobile platforms, were pretty bad and that there appeared to be a direct correlation between the speed of pages loading and the likelihood that a visitor to the site would view more than one page before leaving2 . Thankfully, Google provides via their free PageSpeed Insights product, tips on how to improve the site. Armed with these tips, I set out to improve things.

Now, in hindsight, I wish I had been far more methodical and documented every step— it would have made for a great little series of blog entries or at least improved this one —but I did not, so instead, I want to summarise some of the tasks I undertook. Hopefully, this will be a useful overview for others who want to tackle performance on their own sites. The main changes I made can be organized into server configuration, site configuration, and content.

The simplest to resolve from a technical perspective was content, although it remains the last one to be completed mainly due to the time involved. It turns out that I got a little lazy when writing some of my original posts and did not compress images as much as I probably should have. The larger an image file is, the longer it takes to download, and this is only amplified by less powerful mobile devices. For new posts, I have been resolving this as I go by using a tool called PNGGauntlet to compress my images as either JPEG or PNG before uploading them to the site. Sadly, for images already uploaded to the site, I could only find plugins that ran on Apache (my installation of WordPress is on IIS for reasons that I might go into another time), would cost a small fortune to process all the images, or had reviews that implied the plugin might work great or might just corrupt my entire blog. I decided that for now, to leave things as they are and update images manually when I get the opportunity. This means, unfortunately, it will take a while. Thankfully, the server configuration options helped me out a little.

On the server side, there were two things that helped. The first, to ensure that the server compressed content before sending it to the web browser, did not help with the images, but it did greatly reduce the size of the various text files (HTML, CSS, and JavaScript) that get downloaded to render the site. However, the second change made a huge difference for repeat visitors. This was to make sure that the server told the browser how long it could cache content for before it needed to be downloaded again. Doing this ensured that repeat visitors to the site would not need to download all the CSS, JS, images, and other assets on every visit.

With the content and the server configuration modified to improve performance, the next and most important focus was the WordPress site itself. The biggest change was to introduce caching. WordPress generates HTML from PHP code. This takes time, so by caching the HTML it produces, the speed at which pages are available for visitors is greatly increased. A lot of caching solutions for WordPress are developed with Apache deployments in mind. Thankfully, I found that with some special IIS-specific tweaking, WP Super Cache works great3 .

At this point, the site was noticeably quicker and almost all the PageSpeed issues were eliminated. To finish off the rest, I added a few plugins and got rid of one as well. I used the Autoptimize plugin to concatenate, minify, compress, and perform other magic on the HTML, CSS, and JS files (this improved download times just a touch more by reducing the number of files the browser must request, and reducing the size of those files), I added JavaScript to Footer, a plugin that moves JavaScript to after the fold so that the content appears before the JavaScript is loaded, I updated the ad code (from Google) to use their latest asynchronous version, and I removed the social media plugin I was using, which was not only causing poor performance but was also doing some nasty things with cookies.

Along this journey of optimizing my site, I also took the opportunity to tidy up the layout, audit the cookies that are used, improve the way advertisers can target my ads, and add a sitemap generator to improve some of the ways Google (and other search engines) can crawl the site4. In all, it took about five days to get everything up and running in my spare time.

So, was it worth it?

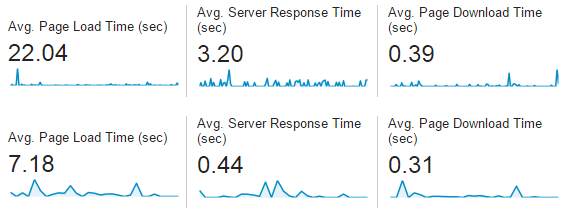

From my perspective, it was definitely worth it (please let me know your perspective in the comments). The image above shows the average page load, server response, and page download times before the changes (from January through April – top row) and after the changes (June – bottom row). While the page download time has only decreased slightly, the other changes show a large improvement. Though I cannot tell for certain what changes were specifically responsible (nor what role, if any, the posts I have been writing have played5 ), I have not only seen the speed improve, but I have also seen roughly a 50-70% increase in visitors (especially from Russia, for some reason), a three-fold increase in ad revenue6, and a small decrease in Bounce Rate, among other changes.

I highly recommend taking the time to look at performance for your own blog. While there are still things that, if addressed, could improve mine (such as hosting on a dedicated server), and there are some things PageSpeed suggested to fix that are outside of my control, I am very pleased with where I am right now. As so many times in my life before, this has led me to the inevitable thought, "what if I had done this sooner?"

- hopefully, there are regular visitors [↩]

- The percentage of visitors that leave after viewing only one page is known as the Bounce Rate [↩]

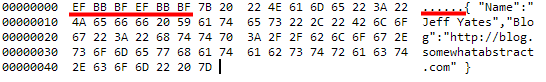

- Provided you don't do things like enable compressing in WP Super Cache and IIS at the same time, for example. This took me a while to understand but the browser is only going to strip away one layer of that compression, so all it sees is garbled nonsense. [↩]

- Some of these things I might blog about another time if there is interest (the cookie audit was an interesting journey of its own). [↩]

- though I possibly could with some deeper use of Google Analytics [↩]

- If that is sustained, I will be able to pay for the hosting of my blog from ad revenue for the first time [↩]