In September, I attended Ann Arbor Give Camp, a local event that connects non-profits with the local developer community to fulfill technological goals. As part of the project I was working on, I installed a plugin called CiviCRM into a WordPress deployment that was running on an IIS-based server.

In September, I attended Ann Arbor Give Camp, a local event that connects non-profits with the local developer community to fulfill technological goals. As part of the project I was working on, I installed a plugin called CiviCRM into a WordPress deployment that was running on an IIS-based server.

It turned out that WordPress integration for CiviCRM was relatively new and a problem unique to IIS-based deployments existed after installation. This led to a white screen when I tried to access CiviCRM. I spent some time troubleshooting and eventually found the issue after I edited two files to track it down. The fix was quickly implemented. Unfortunately, I then discovered that some other features were not working properly.

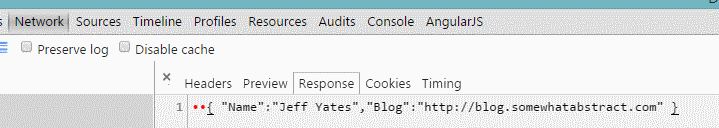

The primary places this new issue surfaced were in displaying dialog windows within CiviCRM. It turned out that these dialogs obtained their UI via an AJAX call that returned some JSON and for some reason, jQuery was indicating that the call failed. Investigating further, I saw that the API call was successful (it returned a 200 status result) and the JSON appeared completely fine. How strange.

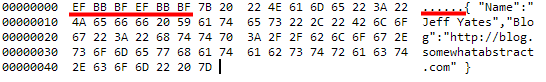

I made some debug changes to the JavaScript using the Google Chrome development tools and looked at the failure method jQuery was calling. In doing so, I discovered jQuery was reporting a parsing error for the JSON result. This seemed bizarre, after all, the JSON looked fine to me. I decided to verify it by copying and pasting it into Sublime. Still, the JSON looked just fine. Being tenacious, I saved the JSON to a text file and then opened it in Visual Studio's binary editor and there, the problem appeared. There were two characters at the start of the file before the first brace: byte order marks.

A byte order mark (often referred to as a BOM) is a Unicode character used to indicate the endianness (byte order) of a text file or stream1. JSON is not supposed to include them at all. In hindsight, I could have seen this issue much sooner if I had paid closer attention to the JSON response in the Network tab of Chrome's developer tools. This view had shown two red dots (see above) before the opening brace, each dot corresponding to a BOM that Chrome knew shouldn't be there. Of course, I had no idea what they meant and so I promptly ignored them. Lesson learned.

So, armed with the knowledge of why the JSON was causing parser errors, I had to find out what was causing this malformation and fix it. After reading about how a BOM in an incorrectly formatted PHP file2 could cause BOMs to be prepended in the PHP output, I started looking at each PHP file that would be touched when generating the API response. Alas, nothing initially stood out. I was getting frustrated when I had an epiphany; I had edited exactly two files in trying to fix the installation issue and there were exactly two BOMs. Coincidence?

I went to the two files that I had edited, downloaded them and discovered they both had BOMs. I re-saved them, this time without a BOM and uploaded them back to the site, which fixed the JSON corruption and got the CiviCRM plug-in in to working order.

In tracking down and fixing this self-made issue, I learned a few valuable lessons:

- Learn to use my developer tools

- Never assume it is not my fault

- It pays to understand how things work

Hopefully, my misfortune in this one incident will help someone track down their own issue with corrupted JSON in WordPress. If so, please share in the comments. Together, our mistakes can be someone else's salvation.