Last week saw the return of Learn Something, a monthly software hacking event held at the offices of Fanzoo Technology in Ann Arbor. Each month, attendees can choose to hack on their own projects or take part in the monthly Learn Something challenge. Teams and pairing are encouraged, providing opportunities to work with new people, tools and techniques.

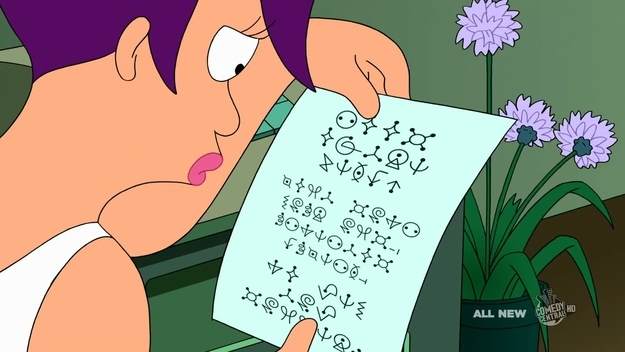

This month the challenge was to decipher a message encoded using a simple substitution cipher. Participants started with a copy of the encoded message (known as the ciphertext) and a text file of words from the English language. The message (shown above) is one example of a cipher, known as 'alienese', that appeared in several episodes of Futurama. Though fans of the show have already solved the cipher and we could have searched the Internet for the solution, our goal at Learn Something was to create a software solution that could solve it (or at least narrow down the possibilities[1]).

What is a simple substitution cipher? A simple substitution cipher is a way of encoding a message by replacing (substituting) each letter of the alphabet with a different letter or symbol. For example, if we had the substitutions `t` to `a`, `h` to `g`, and `e` to `o`, the word `the` would become `ago`.
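To make the example concrete, here is a minimal Python sketch of that three-letter substitution. The names `KEY`, `encode`, and `decode` are my own for illustration, and letters without an entry in the key are simply passed through unchanged, which is an assumption rather than part of the challenge.

```python
# Illustrative key from the example above: t -> a, h -> g, e -> o.
KEY = {"t": "a", "h": "g", "e": "o"}


def encode(message, key):
    """Replace each letter that has a substitution; leave the rest as-is."""
    return "".join(key.get(ch, ch) for ch in message)


def decode(ciphertext, key):
    """Reverse the substitution by inverting the key."""
    reverse = {cipher: plain for plain, cipher in key.items()}
    return "".join(reverse.get(ch, ch) for ch in ciphertext)


print(encode("the", KEY))  # ago
print(decode("ago", KEY))  # the
```

Decoding is only this easy when the key is known. The challenge is to recover the key from the ciphertext alone.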

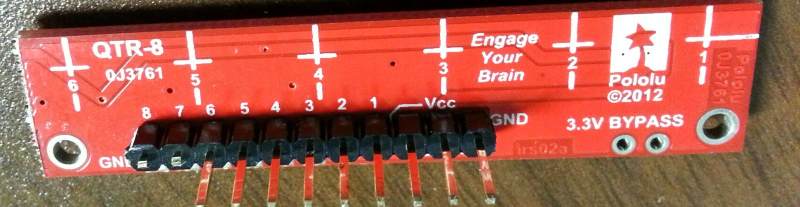

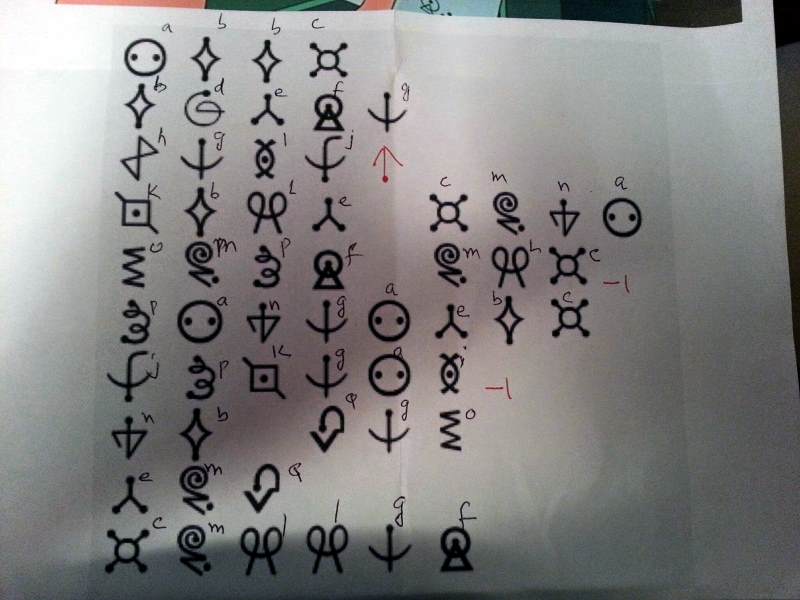

Alex Zolynsky, one of the organizers of Learn Something, provided a clearer printout of the message with punctuation symbols written in red (see below). The intention was to ignore the punctuation and focus on the actual words, so before we started, one of the participants assigned a letter to each symbol so that we had the message written in a more familiar alphabet (and one that was easier to type on our laptops).

The resulting message read:

`abbc bdefg hgij kble cmna ompf mlc pangaebc jpkgai nb qgo emq cmllgf`

Clearly, our work was far from done, but before I continue with this blog, I need to come clean.

At Learn Something last week, I was buried in some work of my own, and other than the occasional interjection, I was not involved in the challenge. However, at the end of the night, I took the cipher home with me and the following evening, I got stuck in. It took me about three hours to crack the message. How did I do it?

Well, there are many ways to tackle breaking a substitution cipher. For example, if the ciphertext is long, we could use frequency analysis to gain some hints at the substitutions[2]. However, this ciphertext was short and, as such, frequency analysis was not particularly helpful[3]. Instead, I decided to follow the same track as the participants at Learn Something: take the longest word in the phrase, find English words that have the same pattern, and use those to start reducing the possibilities. So, I opened up LINQPad (my tool of choice for hacking around), and got started.
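For anyone curious what the frequency analysis mentioned above looks like, here is a small Python sketch that counts the letters in the ciphertext. The function name `letter_frequencies` is my own invention, and this is not the code I used on the night.

```python
from collections import Counter

# The ciphertext as transcribed into a familiar alphabet.
CIPHERTEXT = (
    "abbc bdefg hgij kble cmna ompf mlc pangaebc jpkgai nb qgo emq cmllgf"
)


def letter_frequencies(text):
    """Return (letter, count) pairs, most frequent first, ties alphabetical."""
    counts = Counter(ch for ch in text if ch.isalpha())
    return sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))


for letter, count in letter_frequencies(CIPHERTEXT):
    print(letter, count)
```

With a long ciphertext, the most common symbols would be good candidates for common English letters such as `e` and `t`. With only a dozen or so short words, the counts are too close together to say much.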

The longest word in the ciphertext is `pangaebc`. To match words that follow the same pattern, I treated the word as its own ciphertext and made another substitution, just as the symbols had been substituted for letters earlier: the first distinct letter becomes `a`, the second distinct letter becomes `b`, and so on. This allowed me to normalize different words to see if they matched the same pattern; only words that normalize to the same string share a pattern. So, for `pangaebc`, the normalized version is `abcdbefg`. Examples of English words that match this pattern are `airfield`, `windiest`, and the gross `pustular`; each of these has the pattern `abcdbefg` when normalized. In fact, in the dictionary I used there were over 500 matches, which meant over 500 possibilities for the word that could be in our decoded message.

Each of the possible matches for the longest word provided a possible part of the substitution cipher. The next step was to take another word from the ciphertext and find English words that matched both the pattern of that ciphertext word and the substitutions found from the first word, `pangaebc`. This started to build up a tree of candidate words for the decoded message. Each node in the tree contained words that matched the substitutions required by its parent node and also matched the pattern of a word in the ciphertext. By recursing this approach for each word in the ciphertext sentence, a tree of possible plaintext[4] sentences could be generated.
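The pattern normalization described above can be sketched in a few lines of Python. This is an illustration rather than my actual LINQPad query; the names `normalize` and `matching_words` and the tiny `SAMPLE_WORDS` list are made up for the example.

```python
def normalize(word):
    """Relabel letters in order of first appearance: a, b, c, ..."""
    labels = {}
    for ch in word:
        if ch not in labels:
            # Next unused label; fine for the 26 letters of the alphabet.
            labels[ch] = chr(ord("a") + len(labels))
    return "".join(labels[ch] for ch in word)


def matching_words(cipher_word, words):
    """Keep only the words whose pattern equals the cipher word's pattern."""
    target = normalize(cipher_word)
    return [w for w in words if normalize(w) == target]


# A stand-in for the real dictionary file, which was much larger.
SAMPLE_WORDS = ["airfield", "alphabet", "windiest", "symbols", "pustular"]

print(normalize("pangaebc"))                     # abcdbefg
print(matching_words("pangaebc", SAMPLE_WORDS))  # ['airfield', 'windiest', 'pustular']
```

Because two words of different lengths can never normalize to the same string, there is no need for a separate length check.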

Once all words in the ciphertext had been processed, I could take the branches of the resulting tree that included the same number of nodes as words in the ciphertext and determine the possible substitutions that might give the right plaintext solution. This produced 15 possible sentences. It was then up to me to read each one and pick the one that made the most sense. Of course, I'm not going to spoil it by telling you the solutions I found. Instead, I encourage you to give the challenge a go for yourselves and see what you come up with (we both know you could cheat by searching the Internet for an answer, but you're better than that, right?).
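To avoid spoilers, here is a sketch of the tree search run on a toy ciphertext rather than the real one. It is a Python approximation of the approach, not my LINQPad query. The names `solve` and `extend`, the toy word list, and the rule that no two cipher letters may decode to the same plaintext letter are all assumptions made for this illustration.

```python
def normalize(word):
    """Relabel letters in order of first appearance: a, b, c, ..."""
    labels = {}
    for ch in word:
        labels.setdefault(ch, chr(ord("a") + len(labels)))
    return "".join(labels[ch] for ch in word)


def extend(mapping, cipher_word, plain_word):
    """Add cipher->plain pairs to a copy of mapping, or return None on conflict."""
    new_mapping = dict(mapping)
    used = set(mapping.values())
    for c, p in zip(cipher_word, plain_word):
        if c in new_mapping:
            if new_mapping[c] != p:
                return None  # contradicts an earlier substitution
        elif p in used:
            return None  # two cipher letters cannot share a plaintext letter
        else:
            new_mapping[c] = p
            used.add(p)
    return new_mapping


def solve(cipher_words, dictionary):
    """Return every sentence consistent with the dictionary and one mapping."""
    by_pattern = {}
    for word in dictionary:
        by_pattern.setdefault(normalize(word), []).append(word)
    # Longest cipher words first, as they usually have the fewest candidates.
    order = sorted(cipher_words, key=len, reverse=True)
    sentences = []

    def search(depth, mapping):
        if depth == len(order):
            # A complete branch: decode the words in their original order.
            sentences.append(
                " ".join("".join(mapping[c] for c in w) for w in cipher_words)
            )
            return
        cipher_word = order[depth]
        for candidate in by_pattern.get(normalize(cipher_word), []):
            next_mapping = extend(mapping, cipher_word, candidate)
            if next_mapping is not None:
                search(depth + 1, next_mapping)

    search(0, {})
    return sentences


TOY_WORDS = ["the", "cat", "sat", "dog", "hat", "see", "too"]
print(solve("ago xqa zqa".split(), TOY_WORDS))
# ['the cat sat', 'the sat cat']
```

Even this toy run ends with more than one candidate sentence, which is why the last step is still a human reading the list and picking the one that makes sense.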

I really enjoyed tackling this problem. Not only was it a fun distraction, but now I have a LINQPad query that can solve any substitution cipher as long as I know the language in which the message is written. I am definitely looking forward to the next time I attend Learn Something. Hopefully, your interest is piqued and I will see you there. In the meantime, if you give this challenge a go, I would love to hear how you tackled it, what you did differently to me, and what you learned. Until next time, thanks for reading.

Featured image: "Confederate cipher disk" by RadioFan (talk) – I (RadioFan (talk)) created this work entirely by myself. Licensed under CC BY-SA 3.0 via Wikipedia.

1. to fully solve it programmatically would have needed our software solutions to have understanding of English sentence structure, which was thankfully outside the scope of the challenge

2. assuming we know the language in which it is written

3. I did try it just to see, but frequency analysis needs a longer ciphertext than we had for this challenge

4. decoded text