Last week, we held our first CareEvolution hackathon of 2015. The turnout was impressive, and a wide variety of projects were undertaken, including 3D-printed cups, Azure-based machine learning experiments, and Apple WatchKit prototypes. For my first hackathon project of the year, I decided to tinker with writing a bot for Slack. There are many ways to integrate custom functionality into Slack, including an extensive API. I chose to write a bot and work with the associated API because there was an existing Node.js client wrapper, `slack-client`. Using this wrapper meant I could get straight to my bot's functionality rather than getting intimate with the API and its JSON payloads.

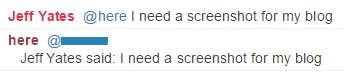

I ended up writing two bots. The first implemented the `@here` concept that we had liked in HipChat and missed when we moved to Slack (Slack has `@channel`, but that also notifies offline people). The second queried our support server for some basic details about our deployments without leaving the current chat, something I felt might be useful to our devops team. In this post, I will concentrate on the first bot, which is simpler and less company-specific; I named it `here-bot`.

The requirement for here-bot is simple:

When a message is sent to `@here` in a channel, notify only the online members of that channel, excluding bots and the sender.

Ideally, this would behave like `@channel` and let users control how they get notified, but I could not find an easy way to achieve that inside or outside of a bot (I raised a support request asking for it as a Slack feature). Instead, I saw two options:

- Tag users in a message back to the channel from `here-bot`

- Direct message the users from `here-bot` with links back to the channel

I decided on the first option as it was a little simpler.

To begin, I installed the client wrapper using `npm`:

```shell
npm install slack-client
```

The `slack-client` package provides a simple wrapper around the Slack API, making it easy to open a connection and get set up for handling messages. I used its sample code as a guide while creating the basic skeleton of `here-bot`.

```javascript
var Slack = require('slack-client');

// Token assigned to the bot by Slack's bot integration setup
var token = 'MY SUPER SECRET BOT TOKEN';

// Arguments: token, autoReconnect, autoMark
var slack = new Slack(token, true, true);

slack.on('open', function () {
  // Names of the channels the bot is a member of
  var channels = Object.keys(slack.channels)
    .map(function (k) { return slack.channels[k]; })
    .filter(function (c) { return c.is_member; })
    .map(function (c) { return c.name; });

  // Names of the open, unarchived private groups the bot belongs to
  var groups = Object.keys(slack.groups)
    .map(function (k) { return slack.groups[k]; })
    .filter(function (g) { return g.is_open && !g.is_archived; })
    .map(function (g) { return g.name; });

  console.log('Welcome to Slack. You are ' + slack.self.name + ' of ' + slack.team.name);

  if (channels.length > 0) {
    console.log('You are in: ' + channels.join(', '));
  } else {
    console.log('You are not in any channels.');
  }

  if (groups.length > 0) {
    console.log('As well as: ' + groups.join(', '));
  }
});

slack.login();
```

This code connects to Slack using the token assigned to the bot when it is set up as a bot integration on Slack's website. It then sets up a handler for the `open` event, which writes the channels and groups the bot belongs to out to the console. In Slack, I could see the bot reported as online while the code was running and offline once I stopped it. As bots go, it was not particularly impressive, but it was amazing how easy it was to get the bot online. The `slack-client` package made it easy to create a connection and iterate over the bot's channels and groups, including checking whether each group was open or archived.
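The `open` handler repeats one pattern twice: `slack.channels` and `slack.groups` are objects keyed by identifier, so each is turned into an array with `Object.keys`, filtered, and reduced to names. The sketch below pulls that pattern into a hypothetical helper, `namesWhere` (not part of `slack-client`), and runs it against made-up sample data that carries only the fields the handler reads (`name`, `is_member`, `is_open`, `is_archived`).

```javascript
// Hypothetical helper (not part of slack-client): given an object keyed by
// identifier, return the names of the values that satisfy the predicate.
function namesWhere(collection, predicate) {
  return Object.keys(collection)
    .map(function (k) { return collection[k]; })
    .filter(predicate)
    .map(function (item) { return item.name; });
}

// Made-up sample data shaped like the fields the open handler reads
var sampleChannels = {
  C001: { name: 'general', is_member: true },
  C002: { name: 'random', is_member: false }
};
var sampleGroups = {
  G001: { name: 'devops', is_open: true, is_archived: false },
  G002: { name: 'old-project', is_open: true, is_archived: true }
};

var channelNames = namesWhere(sampleChannels, function (c) { return c.is_member; });
var groupNames = namesWhere(sampleGroups, function (g) { return g.is_open && !g.is_archived; });

console.log(channelNames); // [ 'general' ]
console.log(groupNames);   // [ 'devops' ]
```

Inside the real handler, the same calls would take `slack.channels` and `slack.groups` in place of the sample objects.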

Next, I needed to determine when my bot was messaged. It turns out that when a bot is a member of a channel (including a direct message), it is notified of every message posted in that channel. In our client code, we receive these messages through the `message` event.

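```javascript
slack.on('message', function (message) {
  // Resolve the raw identifiers into channel and user objects
  var channel = slack.getChannelGroupOrDMByID(message.channel);
  var user = slack.getUserByID(message.user);

  // Skip events without a resolvable channel and user (some carry no user)
  if (message.type === 'message' && channel && user) {
    console.log(channel.name + ':' + user.name + ':' + message.text);
  }
});
```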

Using `slack-client`'s handy helper methods, I turned the message's channel and user identifiers into channel and user objects. Then, if the event is an ordinary message (it turns out there are other types, such as edits and deletions), I write its details to the console.
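Slack's API documentation describes edits and deletions as message events that carry a `subtype` field (for example `message_changed` or `message_deleted`), and some of those events have no `user` at all. As a hedged sketch, assuming the objects passed to the `message` event expose the raw `type`, `subtype`, `user`, and `text` fields, a small predicate can keep only ordinary, person-authored messages before any lookups happen. `isPlainUserMessage` and the sample events are hypothetical.

```javascript
// Hypothetical predicate (not part of slack-client): true only for ordinary
// messages typed by a person. Assumes the raw Slack fields type, subtype,
// user and text are present on the message object.
function isPlainUserMessage(message) {
  return !!message &&
    message.type === 'message' &&
    !message.subtype &&               // edits, deletions, bot posts, etc. carry a subtype
    typeof message.user === 'string' &&
    typeof message.text === 'string';
}

// Made-up sample events
var typed = { type: 'message', channel: 'C001', user: 'U001', text: 'hello' };
var edited = { type: 'message', subtype: 'message_changed', channel: 'C001' };
var presence = { type: 'presence_change', user: 'U001', presence: 'away' };

console.log(isPlainUserMessage(typed));    // true
console.log(isPlainUserMessage(edited));   // false
console.log(isPlainUserMessage(presence)); // false
```

Checking `subtype` rather than only `type` is the design choice here: an edit still arrives as a `message`, so the type check alone would let it through.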

With my bot now listening to messages, I wanted to determine whether a message was addressed to the bot and should therefore alert the channel's users. It turns out that when a message mentions a user, the message text actually contains that user's identifier in place of the displayed `@here` text. For example, a message that appears in the Slack message window as:

```
@here: Anyone know how to write a Slack bot?
```

is delivered to the `message` event as something like:

```
<@U099999>: Anyone know how to write a Slack bot?
```

This special code is how a link to a user or channel is embedded in a message. Armed with this knowledge, and knowing that I would want to mention users, I wrote a couple of helper methods: the first generates a user mention code from a user identifier, and the second determines whether a message is targeted at a specific user (that is, whether it begins with a mention of that user).

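```javascript
// Build Slack's embedded mention code for a user identifier, e.g. <@U099999>
var makeMention = function (userId) {
  return '<@' + userId + '>';
};

// True when the message text begins with a mention of the given user
var isDirect = function (userId, messageText) {
  var userTag = makeMention(userId);
  return messageText &&
    messageText.length >= userTag.length &&
    messageText.substr(0, userTag.length) === userTag;
};
```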
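To check the helpers without connecting to Slack, they can be copied into a standalone script and exercised with sample strings. The bot ID below is made up. One detail worth noticing is that including the closing `>` in the tag stops an ID that merely starts with the bot's ID from counting as a match.

```javascript
// Copies of the helpers from the post, runnable without slack-client
var makeMention = function (userId) {
  return '<@' + userId + '>';
};

var isDirect = function (userId, messageText) {
  var userTag = makeMention(userId);
  return messageText &&
    messageText.length >= userTag.length &&
    messageText.substr(0, userTag.length) === userTag;
};

var botId = 'U099999'; // made-up bot ID

// !! converts the result to a boolean, because isDirect returns the falsy
// text itself (undefined or '') when there is no text to check
console.log(!!isDirect(botId, '<@U099999>: Anyone know how to write a Slack bot?')); // true
console.log(!!isDirect(botId, 'Does <@U099999> know?'));                             // false
console.log(!!isDirect(botId, '<@U0999990>: wrong user'));                          // false
console.log(!!isDirect(botId, undefined));                                          // false
```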

Using these helpers and the handy `slack.self` property, I could then update the `message` handler to log only messages sent directly to `here-bot`.

```javascript
slack.on('message', function (message) {
  var channel = slack.getChannelGroupOrDMByID(message.channel);
  var user = slack.getUserByID(message.user);

  // Only log messages that begin with a mention of this bot
  if (message.type === 'message' && channel && user && isDirect(slack.self.id, message.text)) {
    console.log(channel.name + ':' + user.name + ':' + message.text);
  }
});
```
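Because mentions travel as `<@U…>` codes, the same format can also be read in the other direction. The following sketch is a hypothetical `extractMentions` helper, not something `slack-client` provides, that pulls every mentioned user identifier out of a message's text with a regular expression. It assumes identifiers consist of uppercase letters and digits, and it tolerates a `|name` suffix inside the brackets, which Slack can include in some payloads.

```javascript
// Hypothetical helper (not part of slack-client): return the user IDs of all
// <@USERID> mentions in a message, in order of appearance. An optional
// "|name" suffix inside the brackets is ignored.
function extractMentions(text) {
  var pattern = /<@([A-Z0-9]+)(?:\|[^>]*)?>/g;
  var ids = [];
  var match;
  while ((match = pattern.exec(text || '')) !== null) {
    ids.push(match[1]);
  }
  return ids;
}

console.log(extractMentions('<@U099999>: Anyone know how to write a Slack bot?')); // [ 'U099999' ]
console.log(extractMentions('ping <@U001> and <@U002|bob>'));                      // [ 'U001', 'U002' ]
console.log(extractMentions(undefined));                                           // []
```

Unlike `isDirect`, this finds mentions anywhere in the text, so it suits questions such as "who was mentioned?" rather than "was this addressed to the bot?".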

The final stage was to determine who was present in the channel and craft a message back to that channel mentioning the online users. This turned out to be a little trickier than I had anticipated. The `channel` object in `slack-client` provides an array of its members' user identifiers: `channel.members`. This array contains every user in that channel, whether online or offline, bot or human. To learn anything about each user, I needed the user object, and those details are provided by the `slack.users` property. So I had to join the channel's member identifiers with Slack's user details to get a collection of users for the channel. Through a little investigative debugging, I learned that `slack.users` was not an array of user objects but an object whose property names are user identifiers. With that, I wrote a method to get the online human users for a channel.

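```javascript
// Return the channel's members who are online humans (not bots)
var getOnlineHumansForChannel = function (channel) {
  if (!channel) return [];

  // channel.members holds user IDs; slack.users is an object keyed by user ID
  return (channel.members || [])
    .map(function (id) { return slack.users[id]; })
    .filter(function (u) { return !!u && !u.is_bot && u.presence === 'active'; });
};
```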
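To see the join on its own, here is a sketch of the same logic as `getOnlineHumansForChannel`, written as a hypothetical `onlineHumans` function that takes the member identifiers and the ID-keyed user object as parameters instead of reading `slack.users`. That lets it run against made-up data without a Slack connection. The sample users are invented and carry only the fields the filter reads.

```javascript
// Hypothetical variant of getOnlineHumansForChannel: joins member IDs against
// an object keyed by user ID, keeping only known, non-bot users whose
// presence is 'active'.
function onlineHumans(memberIds, usersById) {
  return (memberIds || [])
    .map(function (id) { return usersById[id]; })
    .filter(function (u) { return !!u && !u.is_bot && u.presence === 'active'; });
}

// Invented sample data in the same shape as slack.users
var users = {
  U001: { id: 'U001', name: 'alice', is_bot: false, presence: 'active' },
  U002: { id: 'U002', name: 'bob', is_bot: false, presence: 'away' },
  U003: { id: 'U003', name: 'here', is_bot: true, presence: 'active' }
};

// U404 is a member ID with no matching user object, so it is dropped
var online = onlineHumans(['U001', 'U002', 'U003', 'U404'], users);
console.log(online.map(function (u) { return u.name; })); // [ 'alice' ]
```

Looking each ID up in the object is a constant-time property access, which is why the ID-keyed shape of `slack.users` makes this join cheap.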

Finally, I crafted a message and wrote it to the channel. In this update of my `message` event handler, I trim the bot's mention from the start of the message and then build an array of mentions for the online users, excluding the user who sent the message. The last step calls `channel.send` to post a message in the channel that mentions those users and repeats the original message text.

```javascript
slack.on('message', function (message) {
  var channel = slack.getChannelGroupOrDMByID(message.channel);
  var user = slack.getUserByID(message.user);

  if (message.type === 'message' && channel && user && isDirect(slack.self.id, message.text)) {
    // Drop the bot's mention and any colon that follows it (e.g. "@here: ...")
    var trimmedMessage = message.text
      .substr(makeMention(slack.self.id).length)
      .replace(/^\s*:/, '')
      .trim();

    // Mention every online human in the channel except the sender
    var onlineUsers = getOnlineHumansForChannel(channel)
      .filter(function (u) { return u.id !== user.id; })
      .map(function (u) { return makeMention(u.id); });

    channel.send(onlineUsers.join(', ') + '\r\n' + user.real_name + ' said: ' + trimmedMessage);
  }
});
```
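The reply-building part of the handler can likewise be pulled out into a pure function for testing. This is a hypothetical `composeHereMessage`, not code from the bot itself: it takes the raw text, the bot's ID, the sender, and the online users, strips the leading bot mention plus any colon after it, and returns the text that would be passed to `channel.send`. The sample IDs and names are invented.

```javascript
// Hypothetical, testable version of the reply-building step. Returns the text
// to post, or null when the message is not addressed to the bot.
function composeHereMessage(text, botId, sender, onlineUsers) {
  var botTag = '<@' + botId + '>';
  if (typeof text !== 'string' || text.indexOf(botTag) !== 0) {
    return null;
  }

  // Drop the bot mention, then an optional colon, then surrounding whitespace
  var body = text.substr(botTag.length).replace(/^\s*:/, '').trim();

  var mentions = onlineUsers
    .filter(function (u) { return u.id !== sender.id; }) // never ping the sender
    .map(function (u) { return '<@' + u.id + '>'; });

  return mentions.join(', ') + '\r\n' + sender.real_name + ' said: ' + body;
}

var sender = { id: 'U001', real_name: 'Alice Smith' };
var online = [{ id: 'U001' }, { id: 'U002' }, { id: 'U003' }];

console.log(composeHereMessage('<@U099999>: Anyone know how to write a Slack bot?', 'U099999', sender, online));
// <@U002>, <@U003>
// Alice Smith said: Anyone know how to write a Slack bot?
console.log(composeHereMessage('just chatting', 'U099999', sender, online)); // null
```

Keeping this step free of `slack` and `channel` objects means the formatting can be checked with plain assertions, while the event handler stays responsible only for lookups and sending.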

Conclusion

My `@here` bot is shown below in its entirety for those who are interested. It was incredibly easy to write thanks to the `slack-client` package, which left me with hackathon time to spare for a more complex bot. I will definitely be using `slack-client` again.

```javascript
var Slack = require('slack-client');

// Token assigned to the bot by Slack's bot integration setup
var token = 'MY SUPER SECRET BOT TOKEN';

// Arguments: token, autoReconnect, autoMark
var slack = new Slack(token, true, true);

// Build Slack's embedded mention code for a user identifier, e.g. <@U099999>
var makeMention = function (userId) {
  return '<@' + userId + '>';
};

// True when the message text begins with a mention of the given user
var isDirect = function (userId, messageText) {
  var userTag = makeMention(userId);
  return messageText &&
    messageText.length >= userTag.length &&
    messageText.substr(0, userTag.length) === userTag;
};

// Return the channel's members who are online humans (not bots)
var getOnlineHumansForChannel = function (channel) {
  if (!channel) return [];

  // channel.members holds user IDs; slack.users is an object keyed by user ID
  return (channel.members || [])
    .map(function (id) { return slack.users[id]; })
    .filter(function (u) { return !!u && !u.is_bot && u.presence === 'active'; });
};

slack.on('open', function () {
  var channels = Object.keys(slack.channels)
    .map(function (k) { return slack.channels[k]; })
    .filter(function (c) { return c.is_member; })
    .map(function (c) { return c.name; });

  var groups = Object.keys(slack.groups)
    .map(function (k) { return slack.groups[k]; })
    .filter(function (g) { return g.is_open && !g.is_archived; })
    .map(function (g) { return g.name; });

  console.log('Welcome to Slack. You are ' + slack.self.name + ' of ' + slack.team.name);

  if (channels.length > 0) {
    console.log('You are in: ' + channels.join(', '));
  } else {
    console.log('You are not in any channels.');
  }

  if (groups.length > 0) {
    console.log('As well as: ' + groups.join(', '));
  }
});

slack.on('message', function (message) {
  var channel = slack.getChannelGroupOrDMByID(message.channel);
  var user = slack.getUserByID(message.user);

  if (message.type === 'message' && channel && user && isDirect(slack.self.id, message.text)) {
    // Drop the bot's mention and any colon that follows it (e.g. "@here: ...")
    var trimmedMessage = message.text
      .substr(makeMention(slack.self.id).length)
      .replace(/^\s*:/, '')
      .trim();

    // Mention every online human in the channel except the sender
    var onlineUsers = getOnlineHumansForChannel(channel)
      .filter(function (u) { return u.id !== user.id; })
      .map(function (u) { return makeMention(u.id); });

    channel.send(onlineUsers.join(', ') + '\r\n' + user.real_name + ' said: ' + trimmedMessage);
  }
});

slack.login();
```