First, full disclosure. Late last year, I entered a Twitter contest held by the official Microsoft Surface Twitter account, @surface. I had to tweet what I would do if I won a Surface 2 with Surface Music Kit. My answer was somewhat throwaway, a spur-of-the-moment thing:

Surface #RemixProject: Incredible music on the go with the Surface Music Kit. No more forgotten, low quality sound bites on a cell phone.

— Jeff Yates (@jefftunes) October 17, 2013

A few weeks later, I received a DM informing me that I had won "something" and to provide my address within five days. I obliged, expecting to get a t-shirt or some other Surface-related swag; I was incredibly surprised when I received a shipping notification one Tuesday that showed a Surface 2 RT with Surface Music Kit would be arriving that afternoon. That said, there was no requirement on my part to provide a review nor that any such review should be unduly positive. With all that known, please read on.

Surface Music Kit

Keyboard

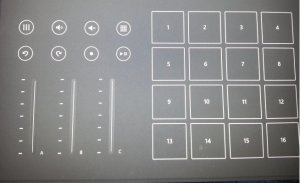

One of the cool things about the Microsoft Surface devices is their covers. The covers (available in Touch and Type variants) have an integrated input device and are the primary method of providing an external keyboard to a Surface[1], though one could also use a Bluetooth or USB keyboard. Most of these covers have an embedded QWERTY keyboard and touchpad, much like a small laptop keyboard. However, the Surface Music Kit includes a special keyboard/cover designed for use with the Surface Music Kit application. This cover has three touch sliders (like electronic volume controls), some playback/record controls like one might find on a multimedia playback device, and a grid of 16 pads. Together, these can be used to mix and manipulate samples in the Surface Music Kit application[2].

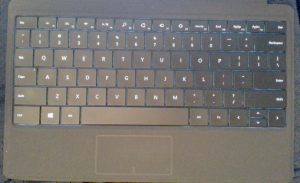

Since the device I won came with only the Music Kit cover, my initial problems stemmed from having to use the onscreen keyboard. As cool as the Surface Music Kit is, I can't type my email password with volume sliders and drum pads. Even though the onscreen keyboard is responsive and has a nice array of layout options (the thumb-friendly layout that splits the keyboard for easy thumb typing is great), I found it a little frustrating to use. Whichever layout I tried, the keyboard occupied too much of the screen, didn't provide the kind of tactile feedback I rely on when typing, and just wasn't fast enough when I wanted to unlock my device. These problems aren't unique to the Surface; I've yet to find any device where the onscreen keyboard doesn't suffer from them (though your mileage may vary). Of course, this problem was easily resolved: I received the Type Cover 2 as a Christmas gift from my wife.

Apps

By now, the number of apps in the Windows Store, especially when compared with the equivalent Android or Apple stores, has been discussed to death. The Windows Store is getting more apps every day, but the choices are limited compared with its competitors. That said, all the apps I really wanted, such as Netflix, LastPass, and Mouse Without Borders, were available and, more often than not, other apps had an online equivalent (for example, I can watch Amazon Prime via the website, though it's not all roses when it comes to websites, so read on).

The frustrating part of apps running on the Surface RT comes from the desktop. Surface RT devices run a version of Windows built for ARM processors instead of the usual x86/x64 processors that other Windows-based devices use. This means that unless a desktop application has been specifically written to run on this special ARM-based version of Windows (such as Office 2013, which Microsoft provides with the Surface 2 RT), it won't run. The outcome is that even the little apps you find useful for everyday stuff, especially as a developer (such as Git, Node, Sublime, etc.), will not work. I didn't expect to find this as frustrating as I have, and it has only been exacerbated by the unnecessary neglect of Internet Explorer by many websites.

Internet Explorer

Internet Explorer 11 is fantastic, but it is also the only option on the Surface 2 RT, given that other browsers are not available for the ARM-based platform. In the past, Internet Explorer has had a much-deserved stigma attached to it due to lack of standards compliance, quirky behavior, and poor performance. This has made it difficult for websites to support older versions of IE. However, the Internet Explorer of old is dead and, for more recent releases, this stigma is just undeserved dead weight that needs to be cast aside. Unfortunately, this message has either not reached many developers or fallen on deaf ears, leading to heavy-handed checks for IE on many sites that block IE users from getting a fully-featured site or interacting with a site at all, even though the site would work if that block were lifted.
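To make the difference concrete, here is a minimal TypeScript sketch contrasting the heavy-handed kind of check described above with simple feature detection. It is only an illustration, not code from any particular site: the function names, the list of required features, and the stand-in global scope are all hypothetical, and the user-agent string is the general shape IE11 reports on an ARM-based Surface.

```typescript
// Hypothetical feature list for illustration only; a real site would probe
// whatever capabilities it actually depends on.
const REQUIRED_FEATURES: ReadonlyArray<string> = [
  "JSON",
  "localStorage",
  "requestAnimationFrame",
];

// Heavy-handed check: rejects any browser whose user-agent string looks like
// Internet Explorer, regardless of what the browser can actually do.
function isBlockedByUserAgent(userAgent: string): boolean {
  return /MSIE |Trident\//.test(userAgent);
}

// Feature detection: reports only the capabilities that are genuinely missing
// from the given global scope (in a browser this would be `window`).
function missingFeatures(
  scope: Record<string, unknown>,
  required: ReadonlyArray<string> = REQUIRED_FEATURES
): string[] {
  return required.filter(
    (name) => scope[name] === undefined || scope[name] === null
  );
}

// Assumed example of an IE11 user-agent string on an ARM-based Surface.
const ie11UserAgent =
  "Mozilla/5.0 (Windows NT 6.3; ARM; Trident/7.0; Touch; rv:11.0) like Gecko";

// Stand-in for a browser global scope that provides every required feature.
const fakeIe11Scope: Record<string, unknown> = {
  JSON: {},
  localStorage: {},
  requestAnimationFrame: () => 0,
};

console.log(isBlockedByUserAgent(ie11UserAgent)); // true: blocked by name alone
console.log(missingFeatures(fakeIe11Scope)); // []: nothing is actually missing
```

The first check turns the browser away purely because of its name; the second would let it through because everything the hypothetical site needs is present.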

As a developer, this has been doubly frustrating. I can't install useful tools like Git or Sublime, nor can I use many online development environments, as they either don't work in Internet Explorer or completely block every version of it. This problem isn't limited to development tools though; I have found that quite a few sites do not properly support IE. Perhaps one day, Microsoft will convince people that IE should be embraced once more and that its legacy should be left in the past, but until then, users of Surface 2 RT may face a substandard browsing experience.

That said, when sites do work with IE11, they work really well. A standout website for developers has to be Codio. I set this up against my GitHub account and have used it successfully to work on code samples for blog entries. Hopefully more sites begin to support IE like this.

Battery Life and Performance

This is where I should pull up some metrics of how the battery life is for various everyday scenarios, but I'm not good at capturing metrics, so I'll tell you my experience. The battery life seems good when using the Surface for general stuff like editing documents, browsing the Internet, etc. The main complaint I have is that I'd like it to have an opt-in auto shutdown if left asleep for more than a day. While sleep uses very little battery, there are times when my Surface has been sleeping for days on end, resulting in a drained battery. This has led to me shutting down the Surface 2 RT whenever I stop using it, which means I lose out on the "always on"-ness. Although the Surface 2 RT cold boots pretty quickly, the readiness after sleep is significantly faster. That said, I have no substantial complaints; my Surface 2 RT is definitely the most battery-efficient Windows device I have had so far[3].

Other bits and bobs

The two cameras on the Surface 2 RT (one front-facing and one rear-facing), stereo speakers, and built-in microphone are all excellent, and it was a pleasure using them all to give a Skype tour of our new bathroom to my parents back in England. The built-in kickstand with two viewing angle positions is also fantastic. In addition, the Surface 2 RT has a full-size USB port, 3.5mm headphone jack, mini-HDMI port, and microSD slot.

Service

It is not often that one gets the opportunity to review the quality of device service and repair at the same time as reviewing the device. However, it has been some time since I first received my Surface 2 RT and in that time, I had the misfortune of dropping it on the concrete floor of our basement[4]. While the Surface 2 RT took the fall with grace, it fumbled the landing, shattering the screen and rendering touch operation impossible.

I followed the instructions on the Surface Online Service Center site to register my device and submit a service request. A broken screen is not covered by the standard warranty, so I paid a $200 fee and got myself a return to print out. I then detached my keyboard, removed the microSD card I had inserted[5], and took my broken device to the local FedEx shipment center along with my return information. The packing and shipping of the device was included without any additional charge.

I do not recall just how long the service took, but within a week or two, a refurbished Surface 2 RT arrived on my doorstep. I eagerly unpacked it and turned it on. It sat in a reboot cycle.

I was not happy.

I plugged in the power and tried again. Still stuck in a reboot cycle.

Eventually, I found instructions to do a full factory reset, after which the device booted but clearly had problems. It was not until I had downloaded and installed just over one gigabyte of updates that it started to behave properly. It is because of this that I have mixed feelings about the service experience.

On the one hand, it was easy to register my device, submit a service request, and get it shipped. On the other hand, I had to use quite a bit of technical know-how to get the replacement device to work. I feel that if Microsoft wants to win over the non-tech market with these devices, they are going to need to do a lot better. I know for sure that many of my friends and relatives would not have known what to do next if faced with the reboot cycle, probably leading to endless tech support calls to Microsoft, or worse, me.

Conclusion

I have been using my Surface on and off over the last few months, trying to see where it fits in my life between the eight-core desktop PC at home, my Core i7 ultrabook at work, and my phone. I was unsure that a tablet/laptop hybrid would work out and, to begin with, it did not. However, once I got the QWERTY Type Cover and discovered which scenarios were useful to me, the Surface 2 RT became a great addition to my regular arsenal of gadgets[6].

The service experience was disappointing. While initially wonderfully easy, it became terribly frustrating once the replacement device arrived, especially since I had paid $200 for the privilege. Microsoft needs to pay more attention to detail in this process if it is to become a first-class experience.

Overall, I like the Surface 2 RT and will continue to use it (in fact, I have been writing most of this blog using it). However, I have to ask myself if I would have bought one and, if I'm honest, I would not. Not because it isn't a great device, but because the price tag, like that of most slates/tablets[7], seems high for what you get[8]. Of course, that also means I wouldn't buy a competitor's device either, so read into that what you will.

1. Both have a trackpad, but the Type Cover has more traditional, plastic, backlit keys.

2. Unfortunately, there is no SDK for using the Surface Music Kit cover in your own apps, which seems like an oversight to me.

3. I currently use a Windows-based Core i7 ultrabook, but have had a Nokia Windows Phone and a variety of Dell laptops.

4. It was during a jam session and I was trying to balance it so I could see the lyrics while I played guitar; the Surface was eager to prove that I was stretching myself beyond operating parameters.

5. Specific instructions stated that these were not guaranteed to be returned if I left them with the Surface.

6. Desktop, laptop, Android phone, Xbox 360, Xbox One.

7. Delete as applicable.

8. Clearly, I would've paid $200 for it, considering I was happy to pay that for the repairs.